You can access the full course here: Hypothesis Testing for Data Science

Part 1

To start this course, we’re going to cover the following topics:

- Random Variables

- Normal Distribution

- Central Limit Theorem

Random Variables

A random variable is a variable whose value is unknown in advance because it is the outcome of a statistical experiment.

Consider the example of a single coin toss X. We do not know what X is going to be, though we do know all the possible values it can take (heads or tails), which are called the domain of the random variable. We also know that each of these possible values has a 50% probability of happening, that is, p(X = H) = p(X = T) = 1/2.

Similarly, if X is now a single roll of a die, we have six different sides (going from 1 to 6), each equally likely:

p(X = 1) = p(X = 2) … = p(X = 6) = 1/6.

Note: X refers to a random variable, while x (lowercase) is usually used for a very specific value.
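
To make this concrete, here is a minimal Python sketch (not taken from the course materials) that simulates the die example with the standard library only. The names `DIE`, `sample` and the fixed seed are illustrative choices of ours.

```python
import random
from fractions import Fraction

# The domain (all possible values) of each random variable.
COIN = ["H", "T"]
DIE = [1, 2, 3, 4, 5, 6]

def sample(domain, rng):
    """Draw one specific value x of the random variable X; all outcomes equally likely."""
    return rng.choice(domain)

rng = random.Random(0)  # fixed seed (arbitrary) so that runs are repeatable
rolls = [sample(DIE, rng) for _ in range(60_000)]

# Theoretical probability of one face versus its observed frequency.
p_face = Fraction(1, len(DIE))
freq_of_three = rolls.count(3) / len(rolls)
print(p_face, round(freq_of_three, 3))
```

Each element of `rolls` is a lowercase x, one realised value, while the procedure that generates them plays the role of the capital X. With many rolls the observed frequency should settle close to 1/6.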

We can divide random variables into two categories:

- Discrete Random Variable: can only take on a countable number of values (you can list the results it can take). Examples of this category are coin tosses, dice rolls, and the number of defective light bulbs in a box of 100.

- Continuous Random Variable: may take on an infinite number of values within a given range. Examples of this second category are the heights of humans, the lengths of flower petals, and the time taken to check out an online cart on a website.

In other words, discrete random variables are basically used for properties that are integers or in a situation where you can list out and enumerate the possibilities involved. Continuous variables usually describe properties that are real numbers (such as heights and lengths of objects in general).
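
As a rough illustration of the difference, the sketch below draws a few values of each kind. The defect rate of 2% is a made-up number, and the height parameters (mean 70 inches, standard deviation 4 inches) are borrowed from the example used later in this course; the function names are ours.

```python
import random

rng = random.Random(1)  # arbitrary fixed seed

def defective_bulbs(box_size=100, p_defective=0.02):
    """Discrete: number of defective bulbs in one box (an integer from 0 to box_size)."""
    return sum(rng.random() < p_defective for _ in range(box_size))

def adult_height(mean=70.0, sd=4.0):
    """Continuous: a height in inches, which can be any real number."""
    return rng.gauss(mean, sd)

counts = [defective_bulbs() for _ in range(5)]
heights = [adult_height() for _ in range(5)]
print(counts)   # whole numbers only
print(heights)  # arbitrary real values
```

The counts can be enumerated (0, 1, 2, ..., 100), whereas between any two heights there is always another possible height.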

Probability Distribution

A probability distribution is a representation of a random variable’s values alongside their associated probabilities. We call it a probability mass function (pmf) for discrete random variables and a probability density function (pdf) for continuous random variables.

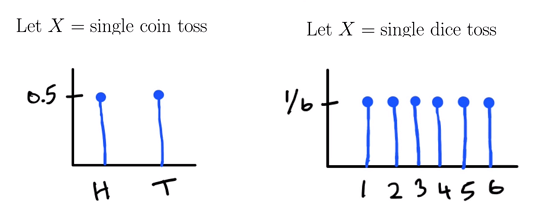

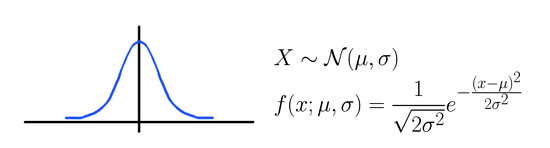

The graphics above show discrete uniform distributions, in which every outcome is equally likely. They are examples of mass functions, as they associate a probability with each possible discrete outcome. A normal distribution, on the other hand, is described by a density function, as we’ll see later on.

The domain of each function (i.e. all the possible outcomes) is listed on the x-axis, and the y-axis shows the probability of each outcome.

Rules of Probability Distributions

- All probabilities must be between 0 and 1, inclusive.

- The sum/integral of all probabilities of a random variable must equal 1.

The examples in the images above already show these two rules in action. All probabilities listed in the two graphics are between 0 and 1 (1/2 and 1/6), and they sum to 1 (1/2 + 1/2 = 1, and likewise 6 × 1/6 = 1 for the die).

A probability value of zero would be considered as “impossible to happen” and if it is one then it is “certain to happen”.
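
The two rules are easy to check in code. Below is a small sketch, assuming we store a probability mass function as a plain dictionary from outcome to probability; `is_valid_pmf` is a helper name of ours, not a library function.

```python
from fractions import Fraction

coin_pmf = {"H": Fraction(1, 2), "T": Fraction(1, 2)}
die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def is_valid_pmf(pmf, tol=1e-9):
    """Check both rules: every probability lies in [0, 1] and the total is 1."""
    in_range = all(0 <= p <= 1 for p in pmf.values())
    sums_to_one = abs(float(sum(pmf.values())) - 1.0) <= tol
    return in_range and sums_to_one

print(is_valid_pmf(coin_pmf), is_valid_pmf(die_pmf))
```

A table such as `{"H": 0.7, "T": 0.7}` fails the second rule, and one containing a negative value or a value above 1 fails the first. For a continuous distribution the sum would be replaced by an integral.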

In the next lesson, we’re going to study the normal distribution.

Part 2

In this lesson, we’re going to talk about the normal distribution.

It is also called the Gaussian distribution, and it’s the most important continuous distribution in all statistics. Many real-world random variables follow the normal distribution: IQs, heights of people, measurement errors, etc.

A normal distribution is defined by two parameters: the mean µ, which sets the location of the peak (the value that appears the most), and the standard deviation σ, which sets how spread out the data is around the mean and therefore how tall and narrow the peak is (σ² is the variance).
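
For reference, the standard form of the normal probability density function, written in terms of the mean µ and the standard deviation σ, is:

```latex
f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)
```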

We won’t go into the details of the density formula, though, as software libraries already do all the work for us. Remember that, from a notation point of view, capital letters stand for random variables and lowercase letters are actual, specific values.
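
As a sketch of what "the library does the work" can look like, the snippet below compares a hand-written version of the normal density with `statistics.NormalDist` from the Python standard library. The parameters (mean 70, standard deviation 4) and the helper name `normal_pdf` are illustrative assumptions; the course itself may use a different library.

```python
import math
from statistics import NormalDist

def normal_pdf(x, mu, sigma):
    """Density of a normal distribution at x, written out from the textbook formula."""
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

heights = NormalDist(mu=70, sigma=4)  # example parameters, chosen for illustration
by_hand = normal_pdf(72, 70, 4)
by_library = heights.pdf(72)
print(by_hand, by_library)
```

Both values should agree up to floating-point rounding, so in practice we can rely on the library call.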

Let’s take a look at some properties of normal distributions:

- Mean, median and mode are equal (and they are all at the peak)

- Symmetric across the mean (both sides look the same)

- Follows the definition of a probability distribution

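- The density is always above zero: the tails get ever closer to the x-axis without touching it (asymptote at the x-axis). The peak height is a density, not a probability, so it can be greater than 1 when σ is small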

- The area under the curve is equal to one (integral)

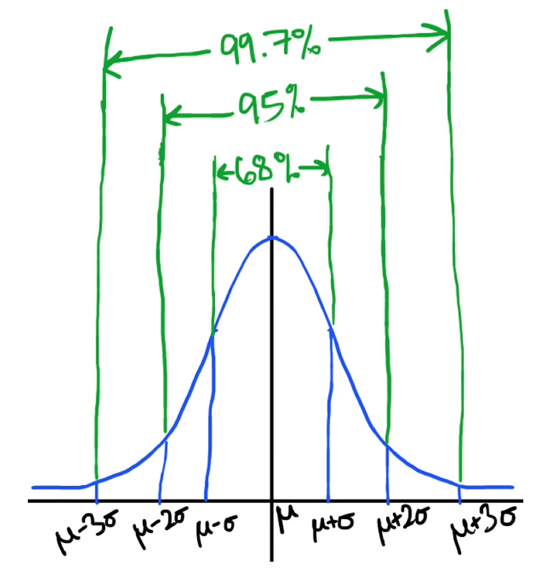
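
These properties can be checked numerically. The following is a minimal sketch using `statistics.NormalDist` with illustrative parameters; the area is approximated with a simple midpoint sum over the mean ± 8σ, which is our own choice of method.

```python
from statistics import NormalDist

dist = NormalDist(mu=70, sigma=4)  # illustrative parameters

# Symmetry: points the same distance either side of the mean have equal density.
left, right = dist.pdf(70 - 3), dist.pdf(70 + 3)

# The median (50th percentile) coincides with the mean.
median = dist.inv_cdf(0.5)

# Area under the curve, approximated with a midpoint sum over mean ± 8σ.
steps = 20_000
lo, hi = 70 - 8 * 4, 70 + 8 * 4
width = (hi - lo) / steps
area = sum(dist.pdf(lo + (i + 0.5) * width) for i in range(steps)) * width

# A density is not a probability: with a small σ the peak rises above 1.
narrow_peak = NormalDist(mu=0, sigma=0.1).pdf(0)

print(left, right, median, area, narrow_peak)
```

The area comes out at approximately 1, while `narrow_peak` is well above 1, which is fine because only areas under the curve are probabilities.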

The Empirical Rule (68-95-99.7)

This rule says that about 68% of the data in a normal distribution lies within ±1 standard deviation (σ) of the mean, about 95% lies within ±2σ, and almost everything (about 99.7%) lies within ±3σ:

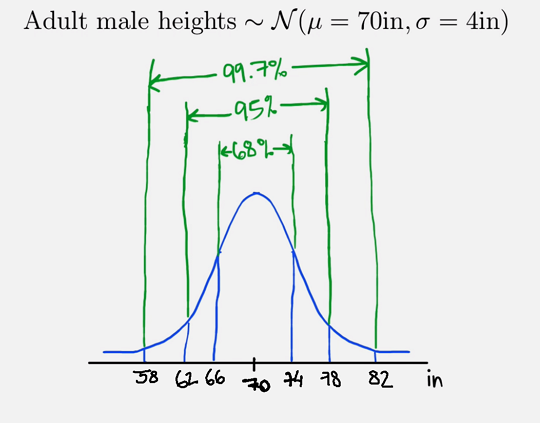
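
We can verify these percentages ourselves. The sketch below uses `statistics.NormalDist` from the Python standard library; `within_k_sigma` is a helper name of ours.

```python
from statistics import NormalDist

def within_k_sigma(k, mu=0.0, sigma=1.0):
    """Probability that a normal variable lands within k standard deviations of its mean."""
    dist = NormalDist(mu, sigma)
    return dist.cdf(mu + k * sigma) - dist.cdf(mu - k * sigma)

for k in (1, 2, 3):
    print(k, round(within_k_sigma(k), 4))
```

The three results are roughly 0.683, 0.954 and 0.997, and they do not change if you pass a different `mu` or `sigma`, which is why the rule works for any normal distribution.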

Suppose that we know the normal distribution for adult male heights and that our µ = 70 inches and σ = 4 inches. Applying the empirical rule, we get the ranges 70 ± 4, 70 ± 8 and 70 ± 12 inches.

That means that about 68% of adult males are between 66 and 74 inches tall, about 95% are between 62 and 78 inches tall, and almost all adult males (about 99.7%) are between 58 and 82 inches tall.
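
The arithmetic behind these ranges is just the mean plus or minus k standard deviations. A short sketch, with `empirical_intervals` as a hypothetical helper name:

```python
MU, SIGMA = 70, 4  # inches, as in the height example

def empirical_intervals(mu, sigma):
    """Map each empirical-rule percentage to its (low, high) range."""
    coverage = {1: "68%", 2: "95%", 3: "99.7%"}
    return {label: (mu - k * sigma, mu + k * sigma) for k, label in coverage.items()}

intervals = empirical_intervals(MU, SIGMA)
for label, (low, high) in intervals.items():
    print(f"about {label} of heights fall between {low} and {high} inches")
```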

Computing Probabilities

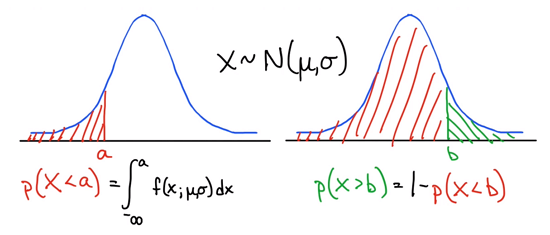

In addition to the probability density function we mentioned in the previous lesson, we also have the cumulative distribution function (cdf), sometimes loosely called the cumulative density function. It is the probability that a random sample drawn from X is less than x: cdf(x) = p(X < x).
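
As an illustration, here is how the cdf could be evaluated for the height example (µ = 70, σ = 4) with `statistics.NormalDist`. This is a sketch using the standard library, not necessarily the library used in the course.

```python
from statistics import NormalDist

heights = NormalDist(mu=70, sigma=4)  # adult male heights in inches, from the example

# cdf(x) = p(X < x): the area under the density curve to the left of x.
p_shorter_than_62 = heights.cdf(62)
p_shorter_than_mean = heights.cdf(70)
print(p_shorter_than_62, p_shorter_than_mean)
```

Since 62 inches is two standard deviations below the mean, the first value is small (a little over 2%), while exactly half of the distribution lies below the mean.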

An interesting thing here is that we can find this probability by calculating the area under the curve (i.e. the integral). However, we cannot ask for the probability of one precise value x in the same way: a single point has no width, so there is no area under the curve above it. What this means is that p(X = x) = 0 for any x.

Note that a probability density function is not a probability, it is a probability density. We have to integrate it in order to have an actual probability.
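
The sketch below makes both points concrete for the height example: the probability of an interval shrinks towards zero as the interval narrows, while the density at the point stays fixed. The helper `prob_between` is our own name.

```python
from statistics import NormalDist

heights = NormalDist(mu=70, sigma=4)

def prob_between(dist, a, b):
    """p(a < X < b): the area under the density between a and b."""
    return dist.cdf(b) - dist.cdf(a)

# Shrink an interval around 62 inches: the probability heads towards zero...
for half_width in (1.0, 0.1, 0.001):
    print(half_width, prob_between(heights, 62 - half_width, 62 + half_width))

# ...while the density at 62 stays the same. For a narrow interval,
# probability is approximately density times interval width.
approx = heights.pdf(62) * 0.002
exact = prob_between(heights, 61.999, 62.001)
point = prob_between(heights, 62, 62)  # a single point has zero width
print(approx, exact, point)
```

So the density tells us how much probability there is per unit of x near a value, and only multiplying by a width (or integrating) turns it into a probability.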

To the right-hand side of the image above we see that the complement can be applied for probability computations with normal distributions, such that the green area can also be computed by taking the difference between 1 and the value of the red area.
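
In code, the complement rule is a single subtraction. A minimal sketch for the height example, again assuming `statistics.NormalDist`:

```python
from statistics import NormalDist

heights = NormalDist(mu=70, sigma=4)

p_below_82 = heights.cdf(82)  # p(X < 82), the area to the left of 82
p_above_82 = 1 - p_below_82   # p(X > 82), by the complement rule
print(p_below_82, p_above_82)
```

Because 82 inches is three standard deviations above the mean, the upper tail is only about 0.1% of the distribution.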

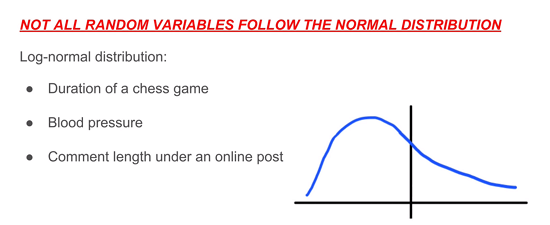

We have to be careful not to assume that everything follows the normal distribution; in fact, we need some justification to assume that. There are many other kinds of distribution, such as the log-normal distribution. The example shown here is clearly not a normal distribution, as it is not symmetric (normal distributions are symmetric). It is a log-normal distribution, which is followed by some real-world phenomena such as the duration of a chess game.
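
One quick way to see the asymmetry is to compare the mean and the median of simulated data. The sketch below draws log-normal samples with the standard library; the parameters 0.0 and 0.75 are arbitrary illustrative values, not fitted to chess games or any real data.

```python
import random
import statistics

rng = random.Random(42)  # arbitrary fixed seed

# If log(X) is normal then X is log-normal; the two parameters describe log(X).
samples = [rng.lognormvariate(0.0, 0.75) for _ in range(50_000)]

mean = statistics.fmean(samples)
median = statistics.median(samples)
print(mean, median)
```

For a normal distribution the mean and median coincide. Here the long right tail pulls the mean above the median, which is one simple warning sign that a normal model may not fit.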

Transcript 1

Hello world, and thanks for joining me. My name is Mohit Deshpande, and in this course, we’re gonna learn all about hypothesis testing. We’re gonna be building our own framework for doing this hypothesis testing. That way, you’ll be able to use it on your own data in your own samples, and you’ll be able to validate your own hypotheses.

So the big concepts that we’re gonna be learning about in this course, we’re gonna learn a little bit about probability distribution. We’ll talk about random variables and what a probability distribution actually is. We’ll talk about some very important distributions, like the Gaussian distribution and the normal distribution, that kind of serve as the backbone for z-tests and eventually t-tests.

And then we’re gonna get on to the actual hypothesis testing section of this, which is gonna include z-tests and t-tests and they’re really ways that we can have a claim and then back it up with statistical evidence. And so we’re gonna learn about how we can do that as well as the different conditions where we might wanna use one or the other, and all of our hypothesis testing examples are gonna be chock full of different examples so that you get practice with running hypothesis tests, and in our frameworks, we’re also gonna use code, and so you will get used to using code to validate hypotheses as well.

So we’re gonna take the math that we learned in this, which is not gonna be that much, and then we’re gonna apply it to, and we’re gonna implement that in code as well, and then we’re gonna use all this to build our framework and then answer some real-world questions and validate real-world hypotheses. We’ve been making courses since 2012, and we’re super excited to have you on board.

Online courses are a great way to learn new skills, and I take a lot of online courses myself. ZENVA courses consist mainly of video lessons that you can watch and rewatch as many times as you want. We also have downloadable source code and project files that contain everything that we build in the lessons. It’s highly recommended that you code along. In my experience, it’s the best way to learn something, is to get your hands dirty. And lastly, we see the students who get the most out of these online courses are the same students that make a weekly plan and stick with it, depending, of course, on your own availability and learning style.

So ZENVA, over the past six years, has taught all kinds of different topics on programming and game development to over 300,000 students. This is across a hundred courses. These skills that they learn in these courses, by the way, are completely transferable to other domains. In fact, some of the students have used these skills that they’ve learned to advance their careers, to make a startup, or to publish their own content from the skills that they’ve learned in these courses.

Thanks again for joining, and I look forward to seeing all the cool stuff you’ll be building. And without further ado, let’s get started.

Transcript 2

Hello everybody. We are going to talk about hypothesis testing. But before we quite get into that, we have to know a little bit of background information in order to do hypothesis testing.

Specifically, we have to know a little bit about random variables and probability distributions, as well as a very important distribution called the normal distribution, as well as a very important theorem called the central limit theorem. And all these things are going to tie together when we get into doing hypothesis testing. We’re going to use all these quite extensively. So the first thing we need to talk about is random variables.

So, a random variable is just a variable whose value is unknown. Another way you can think about this is, a variable that is the outcome of some kind of statistical experiment. So I have two examples here, say Let X equal a single coin toss. So we don’t know what X, we don’t know what the value of X is, but we know all of the possible values that it can take on. We just don’t know what the actual value is, because it is a random variable.

But we can say that, well the probability that X is gonna be heads is a half, the probability that X is gonna be tails is also a half. We don’t know what the actual value is, but we know about all the values it can take on. In other words, we call this the domain of a random variable. For these variables here, it is the different values that it can take. So, think of a dice toss that I have here. We have possible values here that X can be one, two, three, four, five, or six, and each of these are equally likely. And just a point on notation is that this capital X is the random variable, if you see lowercase x that usually means a very specific value of the random variable.

Speaking of random variables, we can broadly separate them into two different categories. We have discrete random variables and continuous random variables. So discrete random variables, as the name implies, can only take on a countable number of values. So, picture things like doing a coin toss or a dice roll. They’re very discrete number values. Using this interesting example, that’s used a lot in statistics textbooks, it’s a seminal problem that you’ll see in statistics textbooks.

If you’ve taken a course on statistics, you’ll probably have some question like this, the number of defective light bulbs in a box of 100. So the different outcomes here are: Light bulb one is defective or not, light bulb two is defective or not, light bulb three is defective, and so on and so on. This is an example of a discrete random variable. So, we have discrete random variables and we also have continuous random variables, and these random variables can take on an infinite number of values, within a given range. So, things like the heights of humans is continuous, things like the lengths of flower petals, or the time to checkout an online cart if you’re buying something from an online retailer, the time to checkout is also an example of a continuous random variable.

Right, so think of things that are real numbers for example. Usually continuous random variables describe properties that are real numbers. So heights of humans for example, are real numbers, they will be measured in feet and inches or centimeters. They can take on an infinite number of values within a particular range, or they don’t even have to be bounded, they can just go from negative infinity to positive infinity, it depends.

And discrete random variables then, can usually describe properties of things that are integers or things that you can actually list out and enumerate. So that’s just kind of the way you can think of it if you ever have a question of whether a variable is discrete or continuous, think about what its possible values could be. Can it take on an infinite number of values? If so, then it’s usually gonna be a continuous random variable.

Okay, so now that we know what random variables are, let’s talk a little bit about what a probability distribution actually is. So it’s really just a representation of the random variable values and their associated probabilities. So you’re gonna encounter two things, different kinds of function, there’s probability mass function, we say (PMF) for discrete random variables. We have probability density function for continuous random variables. So let me use an example. So here’s a probability distribution for a single coin toss. So on the X-axis we have all of the different possibilities, in other words the domain of the random variables. So heads and tails are the only two possible values here.

And on the Y-axis are their associated probabilities. So heads has a probability of 0.5 or half, tails has a probability of 0.5 or a half. In another example, we have the single toss of a six-sided dice. Again, we have put numbers one, two, three, four, five, six and their associated probabilities. Each of them have a probability of 1/6. So these are examples of, actually these two are examples of something called a uniform distribution.

Now, for a uniform distribution, each outcome is equally likely. So for heads and tails, they’re both equally likely. For each of the dice toss for a six-sided dice, each of these outcomes are equally likely. So we call these a uniform distribution and specifically the discrete uniform distribution. And these two are examples of probability mass functions, because they associate a probability to each possible discrete outcome. When we talk about the normal distribution soon, that is going to be an example of a continuous distribution. So we can’t talk about PMFs, probability mass function, we have to talk about the (PDF), or probability density function.

So, this is really just what a probability distribution is and it’s quite easily representable in a nice picture, pictorial format. I think it tends to work quite well for showing what actually is going on with these probabilities. So now, these probabilities are great, but they have some rules. So let’s talk a little bit about some of the rules of these probability distributions. So, all of the probabilities in a probability distribution have to be between zero and one.

Probabilities in general have to be between zero and one, including both zero and one. Right, so the probability of zero is impossible, the probability of one is certain. Anything that goes outside of those ranges doesn’t really make sense in terms of probabilities. The other thing is that the sum or the integral over the domain of the random variable in other words, all the probabilities of a random variable, that has to equal one. So if we’re talking about discrete random variables that’s a sum, if we’re talking about continuous random variables that’s the integral. But don’t worry, we’re not gonna use any calculus.

So what I mean by that is we look at the possible outcomes of heads and tails, if we summed them up we should get one. Intuitively you can think of this as, we’re guaranteed to observe something, something is gonna happen. If we do a coin toss, we’re either gonna get heads or tails. That’s essentially what the second rule of the probability distributions is trying to say, is if we perform or if we look at our random variable and it’s a coin toss or a dice toss or something, we’re guaranteed that something is gonna happen. That’s why the sum has to, that’s why everything has a sum of one.

And you can see for the dice toss, 1/6 plus 1/6 plus 1/6 plus 1/6 plus 1/6 plus 1/6. That’s 6/6, in other words, one. So, also these are actually probability distributions. Because all the probabilities are between zero and one, and they sum up to one. If we had a continuous distribution, we would use the integral. So, that is where I’m gonna stop here for random variables.

So this is just to introduce you to the concept of what is a random variable and what are probability distributions to begin with. And then, now we’re gonna look at some very important distribution theorems in statistics that even allow you to do hypothesis testing.

Okay, so just to give you a quick recap, we talked about what a random variable is, a variable whose value is the outcome of some kind of statistical experiment. In other words, we don’t really know what the value itself is, but we know about the different values that it can take. And we talked about the different kinds of random variables, discrete and continuous, talked a little bit about what probability distributions are, they’re just representations of the probabilities and then they’re actually the domains of the random variables and their associated probabilities.

And we talked a little bit about some of the rules. Probabilities have to be between zero and one, and they have to sum up to, all the probabilities have to sum up to one. So that is all with random variables. And so we’re gonna get into probably the most important distribution in statistics and many other fields, called the normal distribution.

Transcript 3

In this video we are going to talk about the most important distribution in all of statistics and probably in many many other fields called the Normal Distribution.

So like I said, it’s the most important continuous distribution that you’ll ever encounter. It’s used in so many different fields: biology, sociology, finance, engineering, medicine, it’s just, so ubiquitous throughout so many fields, I think a good understanding of it is very transferrable. Sometimes you also hear it called the Gaussian Distribution. They’re just two words for the same distribution. And as it turns out, another reason why it’s so important is that it turns out many real world random variables actually follow this Normal Distribution.

So if you look at things like the IQs of people, heights of people, measurement errors by different kinds of instruments. These can all be modeled really nicely with a Normal Distribution. And we know a lot about the Normal Distribution both statistically and mathematically. So here’s a picture of what it looks like at the bottom there.

Sometimes you’ll also hear it called a bell curve ’cause it kind of looks like the curve of a bell. And it’s parametrized by two things, that’s the mean, which is that lowercase mu (µ), in other words, the average or expected value. What that denotes is where the peak is, all normal distributions have a kind of peak, so the mean just tells you where that peak is. And then we have the standard deviation, which is the lowercase sigma. Sometimes you also see it written as sigma squared, which is called the variance.

The standard deviation tells us the spread of the data away from the mean. Basically it just means how peak-y is it? Is it kind of flat, or is it very peak-y? That’s really what the standard deviation is telling you. And here is the probability density function, it looks kind of like, kind of complicated there. But if you were to run that through some sort of graphing program, given a mean and a standard deviation, it would produce this graph.

Fortunately there are libraries that compute this for us so we don’t have to, we don’t have to look into this too much. So, another notation point I should mention is that capital X, capital letters are usually random variables and lowercase letters are an actual, specific value. That’s just a notation point. So let’s talk a little bit about some of the properties of the Normal Distribution. So, mean, median, and mode are all equal, and they’re all at the peak, we call it the peak. So the peak is the mean, as we’ve said, and the location.

Another really nice property is that it’s perfectly symmetric across the mean. And that’s also going to be useful for hypothesis testing because if we know particular value to the right of the curve, if you take the negative of that around the mean, then we’ll know what the value on the other side of the curve is.

And by the way, this is a true probability distribution, so the largest value is going to be less than one, the tails are always above zero. If you’ve heard this word before, asymptote, that’s what they are, they’re asymptotes at the x-axis. They get really, infinitely close, but never quite touch zero. And if you were to take the integral of this, it would actually equal one. It’s called the Gaussian Integral, in case you’re interested. Another neat property of the Normal Distribution is called the Empirical Rule, and this is just mostly used in rule of thumb, and the neat thing about this is that it works for any mean and any standard deviation.

It will always be true that about 68% of the data are gonna be within plus minus one standard deviation of the mean. About 95% of the data are gonna be between plus minus two, and 99.7 between plus minus three. And later, when we get to some code, we’re gonna verify this so that you don’t just think I’m making these numbers up. We’ll verify this with a scientific library. And, so again, this is just a rule that, a nice rule of thumb to know, if you wanna do some kind of back of the hand calculations, for example.

So let me put actual numbers to this, all right? So suppose that I happen to know what the distribution for adult male heights is, and that it has a mean of 70 inches, and a standard deviation of four inches. Well then I can be sure that if I’m just asking one random person, 68% of people are gonna be between 66 inches and 74 inches of height. And by the time I hit plus minus three standard deviations, between 58 inches and 82 inches, 99.7% of people are gonna be within that range.

And again, this is also gonna be useful for hypothesis testing because if we encounter someone who’s, let’s say, like 90-92 inches, very very tall, we know that that’s a data point that we didn’t expect, that’s in the 0.3% of data, approximately, so this’ll be useful when we get over to hypothesis testing, because that’s kind of an abnormal, or unusual, actually unusual value, and that might be an indicator as to whether this mean is correct or not, or maybe it’s, actually maybe we think that, maybe the value that we think is the mean is not actually the mean, in fact, maybe it should be a little higher, for example. So again, this will all become clear when we do hypothesis testing, but this is just a good rule to know.

Alright, so how do we actually compute probabilities for this? With discrete random variables, you just look at the different outcomes and they tell you what the probabilities are. This is not the case for continuous random variables. It’s a bit more complicated. But again, we’re gonna have libraries that can compute this for us. So, in addition to the probability density function, we have the cumulative density function called the CDF, and what the cdf(x) represents, that’s a lowercase x, is equal to the probability that my random variable takes a value that is less than x.

In other words, if I just pick a random sample out of my probability distribution, the cumulative density function here will tell me the likelihood that I observe that value or less than that value. And, you do some mathematics, it’s actually equal to the area under this curve. In other words, it’s equal to the integral. Again, we’re not going to be doing any calculus, so don’t worry.

So, if I want to know, again, suppose this is, this example of heights, suppose I want to know, if I were to just ask some random person, wanted to ask them, hey, what’s your height? I want to figure out what is the probability that they’re going to be at most 62 inches tall. Well, how I do that is I’d use the Cumulative Density Function, the CDF, and plug in cdf(62), and that’ll tell me what the probability is that if a random, if I asked a random person what their height is, the likelihood that they’re going to be at most 62 inches tall. That’s really all the Cumulative Density Function tells us. The interesting point is that, I can’t ask, what is the probability that I encounter someone that’s exactly 62 inches.

I can’t ask that question because if we go by our cumulative density function, there’s no area under a curve, because it’s not a curve, it’s just a point, there’s no density to it, right? So how we compute this, we actually integrate this density function. But, we can’t do that, because we don’t have a point there, intuitively, you can think of this as, we have, if we compute using the definition of probability, it’s what are the outcomes where this is true, well it’s one, divided by what’s all possible outcomes it could take, it can take on an infinite amount of outcomes! So, hence, it’s equal to zero for any particular x.

One important thing to note, is that the Probability Density Function is not a probability, it’s a probability density, so you have to integrate it in order to get an actual probability. Okay, that’s a lot of words. So the other picture that I have here on the right is to show you that complementation, or the complement, still holds for continuous density functions. So, suppose that I want to know, well, what’s the likelihood that I encounter someone that is taller than 82 inches? Well the CDF only tells me from negative infinity up to 82 inches. How am I gonna know, how do I compute values greater than that? Well, I can take the complement because the probability that x is greater than some value b is going to be equal to one minus the probability that x is less than b.

By the way, we don’t have to be too worried about less than or equal to’s. So I could’ve also said the probability that x is greater than or equal to, it doesn’t really matter because, because of this second bullet point here, that the probability of capital x equals lowercase x, is equal to zero for any x, there’s no area under that curve, so, we can kind of forego the less than or equal to’s. So if I want to compute the probability that I encounter someone that is taller than 82 inches that’s equal to one minus the probability that I encounter someone that’s less than 82 inches, they’re just complements of each other. Okay, so that’s how we’d compute probabilities, and don’t worry, we don’t have to do this integral ourselves, there are library functions that can do this for us.

So one last point that I want to impart on you is that not all variables follow the Normal Distribution. Many scientific papers, or in many things you’ll read, that people assume the Normal Distribution, but you need some kind of justification to assume that, and one thing that we’ll talk about called the Central Limit Theorem, kind of gives us a justification to assume normal distributions under a very specific set of conditions.

But you cannot just assume that, oh this variable must follow the Normal Distribution ’cause it’s used everywhere! It’s not something that we can assume. In fact, there’s lots of distributions that don’t follow the Normal Distribution. For example, here I have a picture of what’s called a Log-normal Distribution. As you can see, it’s not a normal distribution, because, first of all, easy way to look at it is it’s not symmetric. Normal distributions have to be symmetric, it’s not. But, it turns out that real world phenomena still follow this.

For example, the duration of a chess game actually follows a log-normal distribution. Things like blood pressure follow the Log-normal Distribution. Things like comment length, as in how long a comment is that people leave under some kind of online post, that also follows the Log-normal Distribution. So, a lot of random variables can follow other distributions, that’s okay, we have a lot of distributions. But, you can’t just assume that they follow the Normal Distribution without doing some kind of, presenting some kind of justification. So, that’s just something to keep in mind.

Alright, so this was just a precursor to the Normal Distribution, we talked a little bit about what it was, and some of the nice properties of the Normal Distribution, we talked, again, about the Empirical Rule, as well as how we can compute probabilities from a continuous probability distribution, it’s not as easy as with a discrete one. So, now that we have a little bit of information about the Normal Distribution, let’s actually see it in practice.

Interested in continuing? Check out the full Hypothesis Testing for Data Science course, which is part of our Data Science Mini-Degree.