In this tutorial, we’re going to explore the OpenAI API and the parameters you can use to customize a chatbot. By learning how to use system messages, temperature, maximum tokens, and the number of responses, you can get more dynamic and context-appropriate replies from your AI chatbot. These techniques are useful in applications such as customer support, mentoring, and any other scenario where an AI interacts with users.

Before we dive in, it helps to be familiar with Python programming. And while the tutorial uses the OpenAI API, the concepts can be applied to other chatbot APIs as well.



ChatGPT API Parameters

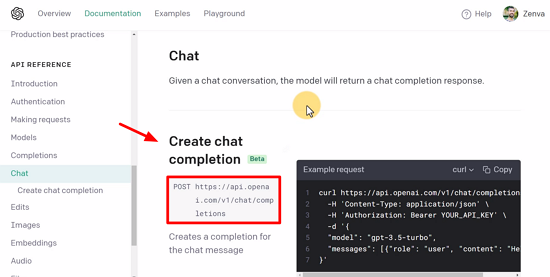

In this lesson, we will be looking at the API documentation and the parameters we can use to customize the chatbot.

API Reference

If you go to https://platform.openai.com/docs/api-reference/chat, you can see the endpoint used to make requests to the API. Our Python code makes these API calls easier, but the reference shows what’s going on behind the scenes. This endpoint can also be called directly from any programming language or from command-line tools such as curl.

From there, you can explore the parameters each request accepts, such as the required model and messages parameters (the model may have a newer version by the time you read this lesson).
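To make the "behind the scenes" part more concrete, here is a minimal sketch of a raw request to the Chat Completions endpoint using only Python's standard library. It builds, but does not send, a POST request whose JSON body holds the model and messages, with the API key passed in a Bearer Authorization header. The build_chat_request name and reading the key from an OPENAI_API_KEY environment variable are our own assumptions for illustration, not part of the original lesson.

```python
import json
import os
import urllib.request

# Chat Completions endpoint listed in the API reference
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(messages, model="gpt-3.5-turbo", api_key=None):
    """Build (but do not send) a raw HTTP POST request to the Chat Completions endpoint."""
    # Assumption: fall back to an OPENAI_API_KEY environment variable when no key is given
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "YOUR_KEY_HERE")
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request([{"role": "user", "content": "Hello!"}])
print(req.get_method(), req.full_url)
# To actually send it, you would call urllib.request.urlopen(req) and parse the JSON reply.
```

This is the same request the Python library sends for you, which is why the reference can show equivalent curl commands.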

System Messages

Let’s now take a look at system messages. A system message gives the AI context about the role it should play. For example, you can tell it that it is a CTO mentoring developers and that, instead of only giving answers, it should also ask guiding questions:

import openai

# pass the api key

openai.api_key = 'YOUR_KEY_HERE'

# define prompt

messages = []

messages.append({'role': 'system', 'content': 'you are a CTO mentoring developers, dont only provide answers also ask guiding questions'})

messages.append({'role': 'user', 'content': 'why is my website down?'})

# make an api call

response = openai.ChatCompletion.create(model='gpt-3.5-turbo', messages=messages)

# print the response

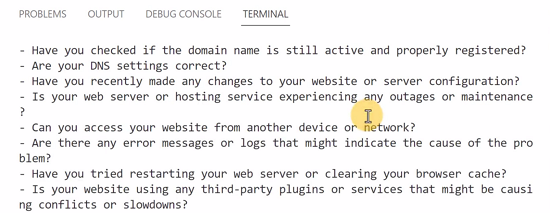

print(response.choices[0].message.content)Note how our response is now structured differently than before:

The AI now suggests what could be wrong with the website in the form of actionable questions. This is useful when building business applications or other products, because it lets you narrow down the context in which your AI communicates with users. You could adapt the example above for a customer support scenario, for instance. Feel free to experiment with different system messages and see what kinds of answers you get!
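As a sketch of the customer support idea, the snippet below wraps the same system-then-user structure in a small helper so you can swap personas easily. The build_messages helper and the support-agent prompt are hypothetical examples of ours; the resulting list is passed to the API exactly like the messages list above.

```python
def build_messages(system_prompt, user_prompt):
    """Return a messages list with the system message first, then the user's message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Hypothetical customer-support persona using the same structure as the CTO example
support_messages = build_messages(
    "You are a friendly support agent for an online store. "
    "Answer briefly and ask for the order number when it is relevant.",
    "My package hasn't arrived yet.",
)

for message in support_messages:
    print(f"{message['role']}: {message['content']}")

# The list can then be sent as before:
# openai.ChatCompletion.create(model='gpt-3.5-turbo', messages=support_messages)
```

Keeping the system prompt in one place makes it easy to try several personas against the same user question.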

Challenge

As a challenge, try going through the other optional parameters of the chat completion create function and implementing them yourself, then come back and compare your results with our take on it.

Temperature Parameter

The temperature parameter controls how random or deterministic the responses are. It accepts values from 0 to 2 and defaults to 1. If you set it to 0, the output becomes (almost) deterministic, so you will get the same or nearly the same response every time:

```python
response = openai.ChatCompletion.create(model='gpt-3.5-turbo', messages=messages, temperature=0)
```

At the default value of 1, you will already see some variation between runs, and as you approach 2 the responses vary a lot more and can become less focused.
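To build intuition for why this happens, here is a conceptual sketch of standard temperature sampling: the model's raw scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities. OpenAI does not document its exact internals, so treat this as an illustration of the general technique; the logit values are made up, and treating temperature 0 as "always pick the top token" is a simplifying assumption.

```python
import math


def token_probabilities(logits, temperature):
    """Turn raw scores into probabilities using a temperature-scaled softmax."""
    if temperature == 0:
        # Simplification: treat temperature 0 as greedy decoding (all probability on the top score)
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens
logits = [2.0, 1.0, 0.5]
for t in (0, 0.5, 1, 2):
    probs = token_probabilities(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Low temperatures concentrate probability on the top candidate, while high temperatures flatten the distribution, so less likely tokens get picked more often.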

Number of Responses

The number of choices (n) parameter allows you to specify how many responses you would like to receive:

```python
response = openai.ChatCompletion.create(model='gpt-3.5-turbo', messages=messages, n=2)
```

The response now contains two messages, so you can print both response.choices[0].message.content and response.choices[1].message.content.
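Rather than indexing each choice by hand, you can loop over all of them, which works for any value of n. The sketch below uses dictionary-style access, which the pre-1.0 library's response objects support alongside attribute access (they are dict subclasses), and which also matches the raw JSON from the endpoint. The choice_texts helper and the hand-written fake_response are our own stand-ins, not real model output.

```python
def choice_texts(response):
    """Collect the message text of every choice in a chat completion response."""
    return [choice["message"]["content"] for choice in response["choices"]]


# Hand-written stand-in for a response requested with n=2 (not real model output)
fake_response = {
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "First answer"}},
        {"index": 1, "message": {"role": "assistant", "content": "Second answer"}},
    ]
}

for i, text in enumerate(choice_texts(fake_response)):
    print(f"Choice {i}: {text}")

# With a real call: choice_texts(openai.ChatCompletion.create(..., n=2))
```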

Maximum Tokens Parameter

The max_tokens parameter allows you to specify the maximum number of tokens that can be returned by the model:

```python
response = openai.ChatCompletion.create(model='gpt-3.5-turbo', messages=messages, n=2, max_tokens=100)
```

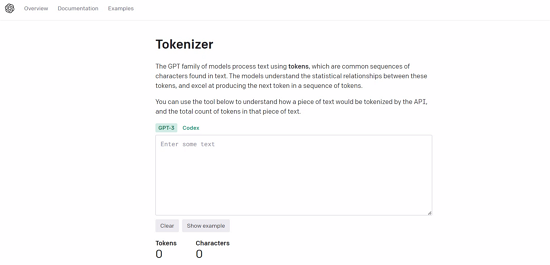

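This caps how long the response can be: if the model reaches the limit, the reply is cut off. Keep in mind that you’re also charged based on the number of tokens you use, counting both the prompt and the response. A token can be a whole word, part of a word, or a single character, and in some languages other than English a single character may even take up more than one token.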
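Because a low max_tokens value can cut replies off mid-sentence, it helps to check why each choice ended. Each choice carries a finish_reason field, which is "length" when the token limit stopped generation and "stop" when the model finished on its own. The sketch below uses hand-written choice dictionaries as stand-ins for real output; the was_truncated name is our own.

```python
def was_truncated(choice):
    """Return True if generation stopped because it hit the max_tokens limit."""
    # finish_reason is "length" when the limit cut the reply short, "stop" when it ended naturally
    return choice["finish_reason"] == "length"


# Hand-written stand-ins for two choices (not real model output)
choices = [
    {"index": 0, "finish_reason": "stop", "message": {"content": "Short reply."}},
    {"index": 1, "finish_reason": "length", "message": {"content": "A long reply that got cut"}},
]

for choice in choices:
    status = "truncated" if was_truncated(choice) else "complete"
    print(f"Choice {choice['index']}: {status}")

# Token counts used for billing are reported in the response's "usage" field,
# e.g. response["usage"]["total_tokens"]
```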

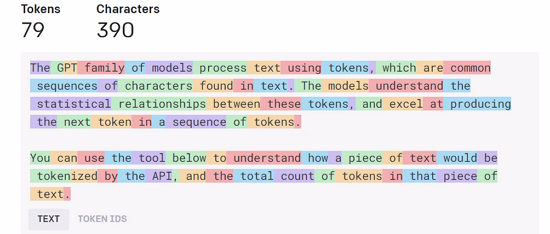

To find out how many tokens a text contains, you can use OpenAI’s Tokenizer tool (available at https://platform.openai.com/tokenizer).

If you enter some text, it will show you each of the tokens that make up that text.
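If you only need a ballpark figure in code, OpenAI’s rule of thumb is that one token corresponds to roughly 4 characters of common English text. The sketch below applies that heuristic; the estimate_tokens name is ours, and the result is only an approximation. For exact counts, use the Tokenizer tool or OpenAI’s tiktoken library.

```python
def estimate_tokens(text):
    """Very rough token estimate based on the ~4 characters per token rule of thumb."""
    # Only a ballpark for English prose; other languages and code often need more tokens.
    if not text:
        return 0
    return max(1, round(len(text) / 4))


print(estimate_tokens("why is my website down?"))
```

An estimate like this can help you pick a sensible max_tokens value, but check real counts before relying on it for cost calculations.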

And that’s it for this lesson! Hopefully, it has given you a better understanding of how the API works and the options available to you. In the next lesson, you’ll learn how to handle errors in your code.

Conclusion

You’ve now learned about the different parameters and tools that can customize the OpenAI chatbot’s behavior, giving you more control over its responses. We’ve covered system messages, temperature, the number of responses, and the maximum tokens parameter, and we also touched on OpenAI’s Tokenizer tool. These concepts will prove valuable when you implement your AI chatbot in different applications and want to improve the quality of its interactions with users.

Keep expanding your knowledge of AI chatbots and experiment with different use cases. Remember that the key to a successful AI chatbot integration is to always align its behavior with the context of the application. Good luck with your future projects, and we hope you’ve learned some valuable information today!

Want to learn more? Try our complete CREATE A BOT WITH PYTHON AND CHATGPT course.

